Logic Models — a roadmap for measuring impact in EdTech

With the increased focus on properly understanding and measuring impact within the education technology industry, we wanted to share how Sown To Grow approaches this in a deliberate, iterative way.

Today we’ll share an overview of how logic models can be a guide for both impact research and product development. Then in the coming months, we’ll dive into details on (1) the underlying learning science and pedagogy behind our logic model, (2) how we have used it to understand the usability and feasibility of our product, and (3) our previous and upcoming efficacy studies.

What is a logic model?

In education, logic models are a tool for describing the theory of action behind your product: which inputs (user actions, technology scaffolds, etc.) are expected to produce a desired set of outcomes. Think of a logic model as a hypothesis to be validated.

Our logic model consists of the interrelated actions educators and students take as they use Sown To Grow, along with how we help facilitate those actions. Based on these actions, we've laid out anticipated intermediate and long-term outcomes: namely, that students build critical social-emotional learning competencies and demonstrate increased long-term achievement.

This logic model is at the center of everything we do. It not only guides how we think about and design our product; it also provides a critical map for our research to measure whether we are achieving the desired impact and where we need to focus to improve.

The logic model as a guide to researching impact

While creating a logic model is a great first step in thinking about the potential impact of your product, there are multiple steps along the journey to validating this impact. We’ll provide an overview of our approach here, then dive deeper in future posts.

Early Stage: Literature Review

An important first step is to make sure your logic model aligns with the latest learning science and pedagogy research. While you can do this yourself, it is extremely helpful to also have an independent education researcher review your logic model and the underlying research. This helps avoid confirmation bias and can be a valuable resource for discovering new research and making adjustments to the model.

For example, while our initial logic model was grounded in research on growth mindset, an external independent review helped us expand to research on the self-regulatory process, the importance of teacher-student relationships, and student voice. This led to improvements not only to the logic model but also to the approach we took with our product.

Here is an example of a literature review conducted in the early stages of Sown To Grow’s development.

Mid Stage: Usability / Feasibility Studies

Mid-stage validation should focus on determining whether your product can be used as intended (i.e., is each step in the logic model possible for the intended user in the intended setting?). This consists of two steps: usability studies and feasibility studies.

Usability studies seek to understand whether the intended users are able to take the actions laid out in your logic model using the product you've designed or developed. They usually occur in "controlled" settings with a clickable design prototype or an early iteration of the product (or feature), and typically consist of a UX designer or researcher observing a user as they complete a set of defined tasks, then conducting an open-ended interview afterward. They are a great resource for iterating on and improving your design or early product.

Feasibility studies aim to understand whether the intended user is able to successfully take the actions laid out in the logic model in the intended setting. These need to occur in real-world settings; they are more of a test than usability studies and are significantly more rigorous and time-consuming. A feasibility study consists of implementing the intended protocol or intervention using your product over a defined period of time, then conducting a mix of observations, interviews, and surveys to understand what went well and where there are gaps. It is useful to include an independent researcher in feasibility studies to reduce confirmation bias. Because these studies are also useful to share with potential users, independent involvement can increase their confidence in the results as well.

You can view several feasibility studies conducted on Sown To Grow with independent researchers on our research and impact page.

Later Stage: Efficacy Study

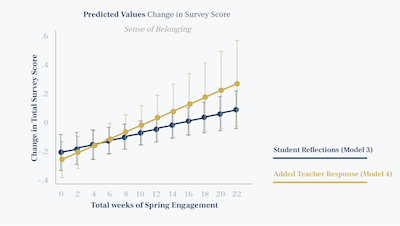

Once you have a product that you know is feasible to implement in the intended context, you can begin to measure whether this implementation leads to the outcomes laid out in your logic model. This requires running more robust efficacy studies. There are several types of studies, including single-case designs, correlational studies, quasi-experimental studies, and randomized controlled trials. While quasi-experimental studies and randomized controlled trials require more effort, time, and money to run, they are much more likely to produce reliable results.

One important thing to remember with efficacy studies: you can't always extrapolate results to other contexts or student populations. For example, an efficacy study showing that your product is effective in a classroom setting does not guarantee that it will be effective in an asynchronous remote learning environment.

Using your logic model and research to inform product decisions

Our logic model, particularly the educator and student actions, guides our product design and decision-making, and the different research steps provide important feedback loops. For example, a usability study might identify confusing user flows that necessitate a redesign, or a feasibility study might expose gaps that need to be filled with new features.

Good education technology products don't follow a linear development path. We try to take an iterative approach to development, with cycles of product development followed by research followed by more product development, all guided by the framework of our logic model.

Remember, the different types of studies used to validate your logic model aren't the end game. If they don't return the results you were hoping for, that does not mean you should stop working and shut down your product. However, it also does not mean you should stick your head in the sand and continue on as before. These studies are an invaluable tool for understanding what is working well and, more importantly, where you need to spend time adjusting, shoring up, or even completely revamping your product to drive the desired impact!
